BTRFS storage driver

Btrfs is a copy-on-write filesystem that supports many advanced storage technologies, making it a good fit for Docker. Btrfs is included in the mainline Linux kernel.

Docker's btrfs storage driver leverages many Btrfs features for image and container management. Among these features are block-level operations, thin provisioning, copy-on-write snapshots, and ease of administration. You can combine multiple physical block devices into a single Btrfs filesystem.

This page refers to Docker's Btrfs storage driver as btrfs and to the overall Btrfs filesystem as Btrfs.

Note: The btrfs storage driver is only supported with Docker Engine CE on SLES, Ubuntu, and Debian systems.

Prerequisites

btrfs is supported if you meet the following prerequisites:

- btrfs is recommended with Docker CE only on Ubuntu or Debian systems.
- Changing the storage driver makes any containers you have already created inaccessible on the local system. Use docker save to save images (turn any container you want to keep into an image with docker commit first), and push existing images to Docker Hub or a private registry, so that you do not need to re-create them later.
- btrfs requires a dedicated block storage device such as a physical disk. This block device must be formatted for Btrfs and mounted into /var/lib/docker/. The configuration instructions below walk you through this procedure. By default, the SLES / filesystem is formatted with Btrfs, so for SLES you do not need to use a separate block device, but you can choose to do so for performance reasons.
- btrfs support must exist in your kernel. To check this, run the following command:

  $ grep btrfs /proc/filesystems
  btrfs

- To manage Btrfs filesystems at the level of the operating system, you need the btrfs command. If you don't have this command, install the btrfsprogs package (SLES) or the btrfs-tools package (Ubuntu; newer Ubuntu and Debian releases name it btrfs-progs).
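The two checks above (kernel support and the btrfs command) can be combined into a small script. This is a minimal sketch assuming a POSIX shell with awk; the function names are hypothetical and are not part of Docker or btrfs-progs.

```shell
#!/bin/sh
# Sketch: check the kernel-support and btrfs-tool prerequisites.
# The filesystems file is a parameter only so the check can be tried on a
# copy; it defaults to /proc/filesystems. A modular btrfs driver is only
# listed there after the module is loaded (for example with modprobe btrfs).

# Succeeds if the filesystems list has an entry named exactly "btrfs".
kernel_supports_btrfs() {
  # Lines look like "nodev<TAB>proc" or "<TAB>btrfs"; the name is the last field.
  awk '$NF == "btrfs" { found = 1 } END { exit !found }' "${1:-/proc/filesystems}"
}

# Succeeds if the btrfs command-line tool is on the PATH.
have_btrfs_tool() {
  command -v btrfs >/dev/null 2>&1
}

# Prints a short report; returns 1 if either prerequisite is missing.
check_btrfs_prereqs() {
  missing=0
  if kernel_supports_btrfs "${1:-/proc/filesystems}"; then
    echo "kernel: btrfs supported"
  else
    echo "kernel: btrfs not listed"
    missing=1
  fi
  if have_btrfs_tool; then
    echo "tools: btrfs command found"
  else
    echo "tools: btrfs command missing"
    missing=1
  fi
  return "$missing"
}
```

Running check_btrfs_prereqs with no arguments checks the host's real /proc/filesystems; no root privileges are needed.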

Configure Docker to use the btrfs storage driver

This procedure is essentially identical on SLES and Ubuntu.

- Stop Docker.

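- Copy the contents of /var/lib/docker/ to a backup location, then empty the contents of /var/lib/docker/:

  $ sudo cp -au /var/lib/docker /var/lib/docker.bk
  $ sudo sh -c 'rm -rf /var/lib/docker/*'

  The rm command runs through sh -c so that root expands the *. /var/lib/docker/ is normally not readable by other users, so a glob expanded by your own shell can match nothing, in which case rm -rf silently removes nothing.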

- Format your dedicated block device or devices as a Btrfs filesystem. This example assumes that you are using two block devices called /dev/xvdf and /dev/xvdg. Double-check the block device names, because this is a destructive operation.

  $ sudo mkfs.btrfs -f /dev/xvdf /dev/xvdg

  There are many more options for Btrfs, including striping and RAID. See the Btrfs documentation.

- Mount the new Btrfs filesystem on the /var/lib/docker/ mount point. You can specify any of the block devices used to create the Btrfs filesystem.

  $ sudo mount -t btrfs /dev/xvdf /var/lib/docker

  Note: Make the change permanent across reboots by adding an entry to /etc/fstab.

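- Copy the contents of /var/lib/docker.bk to /var/lib/docker/.

  $ sudo sh -c 'cp -au /var/lib/docker.bk/* /var/lib/docker/'

  The sh -c wrapper lets root expand the *, because /var/lib/docker.bk is normally not readable by other users.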

- Configure Docker to use the btrfs storage driver. This is required even though /var/lib/docker/ is now using a Btrfs filesystem. Edit or create the file /etc/docker/daemon.json. If it is a new file, add the following contents. If it is an existing file, add only the key and value, being careful to end the line with a comma if it isn't the final line before a closing curly bracket (}).

  {
    "storage-driver": "btrfs"
  }

  See all storage options for each storage driver in the daemon reference documentation.

- Start Docker. When it's running, verify that btrfs is being used as the storage driver.

  $ docker info

  Containers: 0
   Running: 0
   Paused: 0
   Stopped: 0
  Images: 0
  Server Version: 17.03.1-ce
  Storage Driver: btrfs
   Build Version: Btrfs v4.4
   Library Version: 101
  <...>

- When you are ready, remove the /var/lib/docker.bk directory.
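The steps above can be collected into one script, which may be easier to review before running. This is a sketch under assumptions the procedure does not state: a systemd host (systemctl), sudo rights, and no existing /etc/docker/daemon.json. The function names and the DAEMON_JSON override are hypothetical. It only prints each command unless DRY_RUN=0 is set, and it leaves the /etc/fstab entry and the removal of /var/lib/docker.bk to you.

```shell
#!/bin/sh
# Sketch of the btrfs migration procedure. Assumes systemd, sudo rights and
# no existing daemon.json. Commands are only printed unless DRY_RUN=0,
# because mkfs.btrfs destroys whatever is on the devices.

run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    printf '+ %s\n' "$*"    # dry run: show the command instead of running it
  else
    "$@"
  fi
}

migrate_to_btrfs() {
  # DAEMON_JSON is a hypothetical override, mainly useful for testing.
  daemon_json=${DAEMON_JSON:-/etc/docker/daemon.json}
  if [ "$#" -lt 1 ]; then
    echo "usage: migrate_to_btrfs DEVICE [DEVICE...]" >&2
    return 1
  fi
  if [ -e "$daemon_json" ]; then
    echo "$daemon_json exists: add \"storage-driver\": \"btrfs\" to it by hand" >&2
    return 1
  fi
  run sudo systemctl stop docker
  run sudo cp -au /var/lib/docker /var/lib/docker.bk
  run sudo sh -c 'rm -rf /var/lib/docker/*'        # glob expanded as root
  run sudo mkfs.btrfs -f "$@"                       # DESTRUCTIVE
  run sudo mount -t btrfs "$1" /var/lib/docker      # any member device works
  run sudo sh -c 'cp -au /var/lib/docker.bk/* /var/lib/docker/'
  run sudo mkdir -p "$(dirname "$daemon_json")"
  run sudo sh -c "printf '%s\n' '{ \"storage-driver\": \"btrfs\" }' > $daemon_json"
  run sudo systemctl start docker
  run docker info --format '{{.Driver}}'            # expected to print btrfs
}

# Only act when devices are given on the command line.
if [ "$#" -gt 0 ]; then
  migrate_to_btrfs "$@"
fi
```

For example, saving it as migrate.sh and running sh migrate.sh /dev/xvdf /dev/xvdg prints the ten commands for review; DRY_RUN=0 sh migrate.sh /dev/xvdf /dev/xvdg would execute them.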

Manage a Btrfs volume

One of the benefits of Btrfs is the ease of managing Btrfs filesystems without the need to unmount the filesystem or restart Docker.

When space gets low, Btrfs automatically expands the volume in chunks of roughly 1 GB.

To add a block device to a Btrfs volume, use the btrfs device add and btrfs filesystem balance commands.

$ sudo btrfs device add /dev/svdh /var/lib/docker

$ sudo btrfs filesystem balance /var/lib/docker

Note: While you can do these operations with Docker running, performance suffers. It might be best to plan an outage window to balance the Btrfs filesystem.
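The two commands can be wrapped with a before-and-after look at space usage. This is a sketch under stated assumptions: the device is whatever you pass in (the example above uses /dev/svdh), the btrfs filesystem usage calls are an addition not in the original steps, the function name and MOUNTPOINT override are hypothetical, and commands are only printed unless DRY_RUN=0.

```shell
#!/bin/sh
# Sketch: grow the Btrfs filesystem behind /var/lib/docker without unmounting.
# Commands are only printed unless DRY_RUN=0.

run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

grow_docker_btrfs() {
  mnt=${MOUNTPOINT:-/var/lib/docker}   # hypothetical override
  if [ "$#" -lt 1 ]; then
    echo "usage: grow_docker_btrfs DEVICE [DEVICE...]" >&2
    return 1
  fi
  run sudo btrfs filesystem usage "$mnt"     # space before the change
  run sudo btrfs device add "$@" "$mnt"      # add the new device(s)
  run sudo btrfs filesystem balance "$mnt"   # spread existing chunks across devices
  run sudo btrfs filesystem usage "$mnt"     # space after the change
}

if [ "$#" -gt 0 ]; then
  grow_docker_btrfs "$@"
fi
```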

How the btrfs storage driver works

The btrfs storage driver works differently from other storage drivers in that your entire /var/lib/docker/ directory is stored on a Btrfs volume.

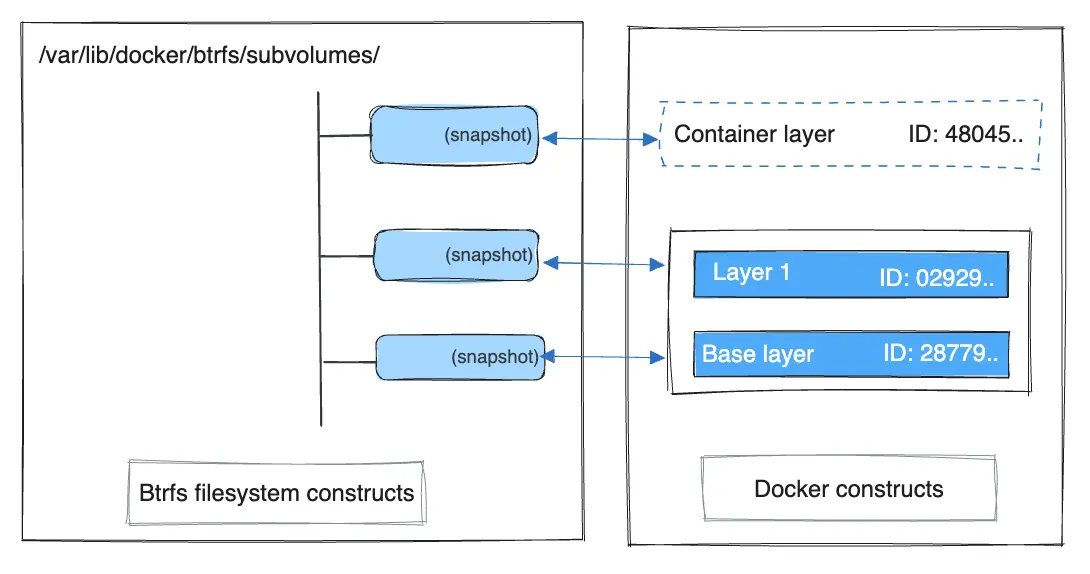

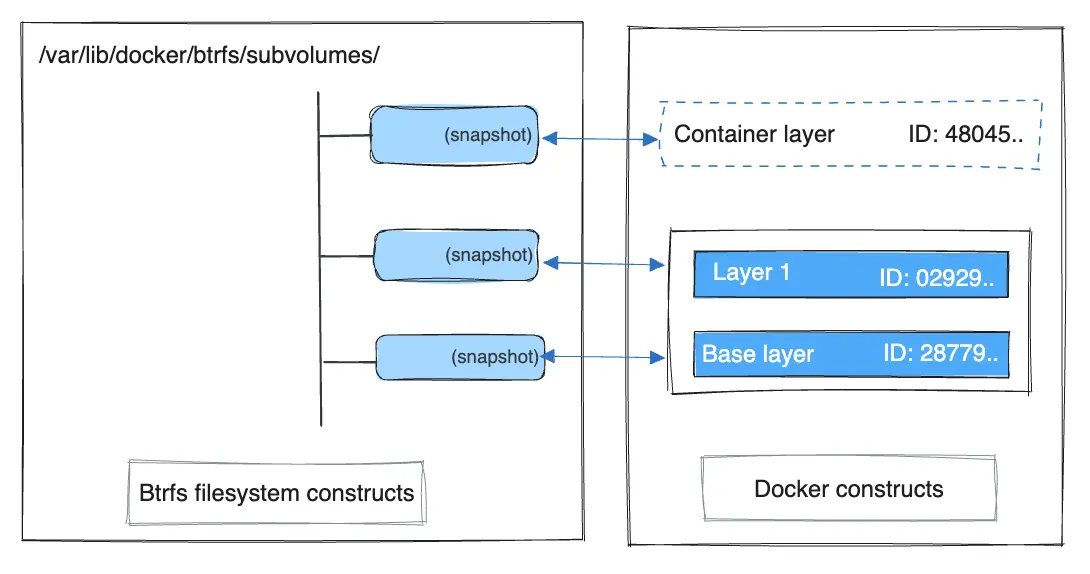

Image and container layers on-disk

Information about image layers and writable container layers is stored in /var/lib/docker/btrfs/subvolumes/. This subdirectory contains one directory per image or container layer, with the unified filesystem built from a layer plus all its parent layers. Subvolumes are natively copy-on-write and have space allocated to them on-demand from an underlying storage pool. They can also be nested and snapshotted. The diagram below shows 4 subvolumes. 'Subvolume 2' and 'Subvolume 3' are nested, whereas 'Subvolume 4' shows its own internal directory tree.

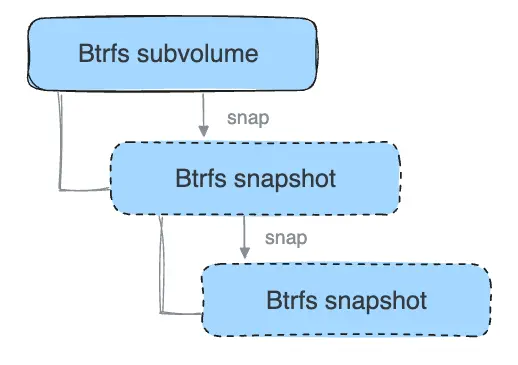

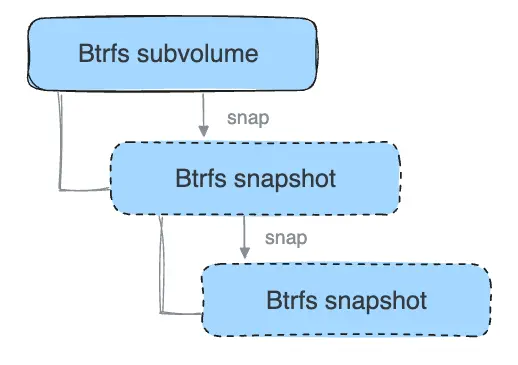
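Because each layer directory is a Btrfs subvolume or snapshot, the layers can be listed with btrfs subvolume list. A sketch, assuming /var/lib/docker is the mount point of the Docker Btrfs filesystem so that listed paths start with btrfs/subvolumes/; the function name is hypothetical.

```shell
#!/bin/sh
# Sketch: print the Docker layer directories that btrfs reports as subvolumes.
# Reads `btrfs subvolume list` output on stdin, whose lines look like
#   ID 257 gen 9 top level 5 path btrfs/subvolumes/<layer>
docker_layer_subvolumes() {
  awk '{
    path = $NF                              # the path is the last field
    if (path ~ /^btrfs\/subvolumes\//) {
      sub(/^btrfs\/subvolumes\//, "", path) # keep only the layer directory name
      print path
    }
  }'
}
```

Usage: sudo btrfs subvolume list /var/lib/docker | docker_layer_subvolumes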

Only the base layer of an image is stored as a true subvolume. All the other layers are stored as snapshots, which only contain the differences introduced in that layer. You can create snapshots of snapshots as shown in the diagram below.

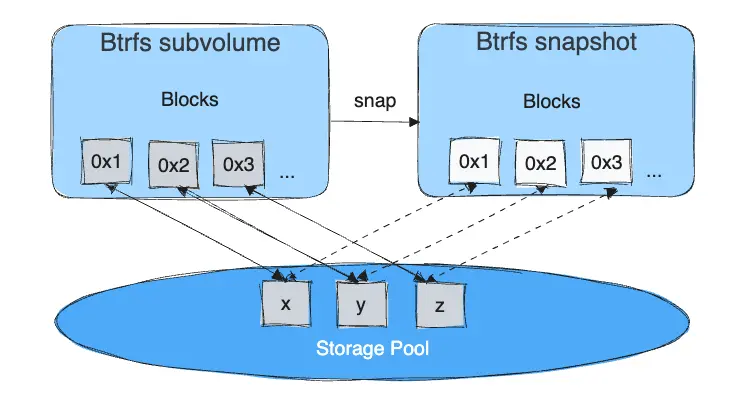

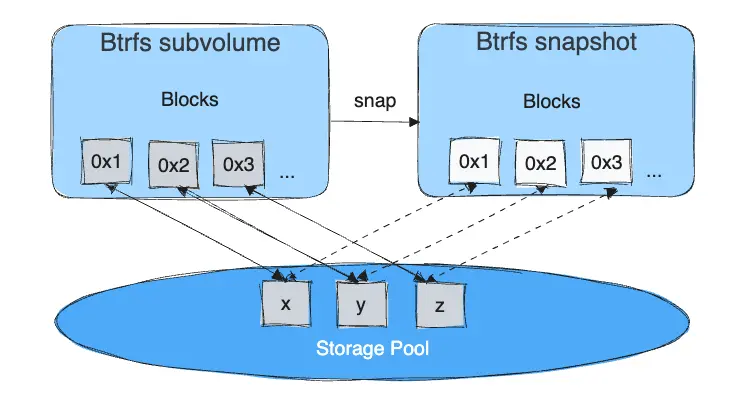

On disk, snapshots look and feel just like subvolumes, but in reality they are much smaller and more space-efficient. Copy-on-write is used to maximize storage efficiency and minimize layer size, and writes in the container's writable layer are managed at the block level. The following image shows a subvolume and its snapshot sharing data.

For maximum efficiency, when a container needs more space, it is allocated in chunks of roughly 1 GB in size.

Docker's btrfs storage driver stores every image layer and container in its own Btrfs subvolume or snapshot. The base layer of an image is stored as a subvolume whereas child image layers and containers are stored as snapshots. This is shown in the diagram below.

The high-level process for creating images and containers on Docker hosts running the btrfs driver is as follows:

- The image's base layer is stored in a Btrfs subvolume under /var/lib/docker/btrfs/subvolumes.
- Subsequent image layers are stored as a Btrfs snapshot of the parent layer's subvolume or snapshot, but with the changes introduced by this layer. These differences are stored at the block level.
- The container's writable layer is a Btrfs snapshot of the final image layer, with the differences introduced by the running container. These differences are stored at the block level.
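The same layering can be reproduced by hand with the btrfs CLI on a scratch Btrfs filesystem, which may help when reading the list above. This is an illustration only: Docker's driver performs the equivalent operations itself, and the mount point /mnt/scratch, the function name and all layer names are hypothetical. Commands are only printed unless DRY_RUN=0.

```shell
#!/bin/sh
# Illustration of subvolume/snapshot layering with the btrfs CLI.
# ROOT and all layer names are hypothetical.

run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# build_layer_chain BASE [LAYER...]
# BASE becomes a real subvolume; each LAYER is a snapshot of the one before
# it; a final "container-rw" snapshot stands in for the writable layer.
build_layer_chain() {
  root=${ROOT:-/mnt/scratch}
  if [ "$#" -lt 1 ]; then
    echo "usage: build_layer_chain BASE [LAYER...]" >&2
    return 1
  fi
  parent=$1
  shift
  run sudo btrfs subvolume create "$root/$parent"
  for layer in "$@"; do
    # The layer's own changes would then be written into "$root/$layer".
    run sudo btrfs subvolume snapshot "$root/$parent" "$root/$layer"
    parent=$layer
  done
  run sudo btrfs subvolume snapshot "$root/$parent" "$root/container-rw"
}
```

For example, build_layer_chain base l1 l2 prints one subvolume create followed by three snapshots, the last of which plays the role of the container's writable layer.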

How container reads and writes work with btrfs

Reading files

A container is a space-efficient snapshot of an image. Metadata in the snapshot points to the actual data blocks in the storage pool. This is the same as with a subvolume. Therefore, reads performed against a snapshot are essentially the same as reads performed against a subvolume.

Writing files

As a general caution, writing and updating a large number of small files with Btrfs can result in slow performance.

Consider three scenarios where a container opens a file for write access with Btrfs.

Writing new files

Writing a new file to a container invokes an allocate-on-demand operation to allocate a new data block to the container's snapshot. The file is then written to this new space. The allocate-on-demand operation is native to all writes with Btrfs and is the same as writing new data to a subvolume. As a result, writing new files to a container's snapshot operates at native Btrfs speeds.

Modifying existing files

Updating an existing file in a container is a copy-on-write operation (redirect-on-write is the Btrfs terminology). The original data is read from the layer where the file currently exists, and only the modified blocks are written into the container's writable layer. Next, the Btrfs driver updates the filesystem metadata in the snapshot to point to this new data. This behavior incurs minor overhead.

Deleting files or directories

If a container deletes a file or directory that exists in a lower layer, Btrfs masks the existence of the file or directory in the lower layer. If a container creates a file and then deletes it, this operation is performed in the Btrfs filesystem itself and the space is reclaimed.

Btrfs and Docker performance

There are several factors that influence Docker's performance under the btrfs storage driver.

Note: Many of these factors are mitigated by using Docker volumes for write-heavy workloads, rather than relying on storing data in the container's writable layer. However, in the case of Btrfs, Docker volumes still suffer from these drawbacks unless /var/lib/docker/volumes/ is backed by a filesystem other than Btrfs.
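To find out whether the note above applies to a given host, you can check which filesystem backs the volumes directory. A minimal sketch, assuming GNU coreutils stat (where -f reports on the filesystem and %T prints its type name); the function name is hypothetical.

```shell
#!/bin/sh
# Sketch: report whether a directory (default /var/lib/docker/volumes)
# lives on Btrfs.
# Exit status: 0 = Btrfs, 1 = another filesystem, 2 = path could not be checked.
is_btrfs() {
  dir=${1:-/var/lib/docker/volumes}
  fstype=$(stat -f -c %T "$dir" 2>/dev/null) || return 2
  [ "$fstype" = btrfs ]
}
```

Usage: is_btrfs && echo "volumes share the Btrfs drawbacks"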

Page caching

Btrfs doesn't support page cache sharing. This means that each process accessing the same file copies the file into the Docker host's memory. As a result, the btrfs driver may not be the best choice for high-density use cases such as PaaS.

Small writes

Containers performing lots of small writes (a usage pattern that also matches starting and stopping many containers in a short period of time) can lead to poor use of Btrfs chunks. This can prematurely fill the Btrfs filesystem and lead to out-of-space conditions on your Docker host. Use btrfs filesystem show to closely monitor the amount of free space on your Btrfs device.
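A check like the following could run regularly to catch a filling filesystem early. It is a sketch: it uses btrfs filesystem usage -b (byte counts) instead of the show subcommand suggested above, because its "Free (estimated):" line is easy to parse. The function names and the 10 GiB default threshold are arbitrary examples.

```shell
#!/bin/sh
# Sketch: warn when the Docker Btrfs filesystem is running low on space.

# Reads `btrfs filesystem usage -b` output on stdin and prints the
# "Free (estimated):" value in bytes; fails if the line is missing.
btrfs_free_bytes() {
  awk '/Free \(estimated\):/ { print $3; found = 1 } END { exit !found }'
}

# Returns 1 (with a warning) when free bytes fall below the threshold,
# 2 when the usage output could not be parsed.
check_btrfs_free() {
  threshold=${1:-10737418240}   # 10 GiB, an arbitrary example
  free=$(btrfs_free_bytes) || { echo "could not parse usage output" >&2; return 2; }
  if [ "$free" -lt "$threshold" ]; then
    echo "WARNING: only $free bytes free on the Docker Btrfs filesystem" >&2
    return 1
  fi
}
```

Usage: sudo btrfs filesystem usage -b /var/lib/docker | check_btrfs_free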

Sequential writes

Btrfs uses a journaling technique when writing to disk. This can impact the performance of sequential writes, reducing performance by up to 50%.

Fragmentation

Fragmentation is a natural byproduct of copy-on-write filesystems like Btrfs. Many small random writes can compound this issue. Fragmentation can manifest as CPU spikes when using SSDs or head thrashing when using spinning disks. Either of these issues can harm performance.

If your Linux kernel version is 3.9 or higher, you can enable the autodefrag feature (the -o autodefrag mount option) when mounting a Btrfs volume. Test this feature on your own workloads before deploying it into production, as some tests have shown a negative impact on performance.

SSD performance

Btrfs includes native optimizations for SSD media. To enable these features, mount the Btrfs filesystem with the -o ssd mount option. These optimizations include enhanced SSD write performance by avoiding optimizations, such as seek optimizations, that don't apply to solid-state media.
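Mount options such as ssd or autodefrag can be made persistent with an /etc/fstab entry. A sketch that prints such a line; the function name is hypothetical, and the UUID must come from your own system, for example via blkid -s UUID -o value /dev/xvdf.

```shell
#!/bin/sh
# Sketch: print an /etc/fstab line that mounts the Docker Btrfs filesystem
# on /var/lib/docker at boot with the given mount options.
fstab_entry() {
  if [ -z "${1:-}" ]; then
    echo "usage: fstab_entry UUID [OPTIONS]" >&2
    return 1
  fi
  # Fields: device, mount point, type, options, dump, fsck pass.
  # Pass 0 because fsck.btrfs does not check Btrfs filesystems at boot.
  printf 'UUID=%s /var/lib/docker btrfs %s 0 0\n' "$1" "${2:-defaults}"
}
```

Usage: fstab_entry "$(sudo blkid -s UUID -o value /dev/xvdf)" ssd,autodefrag | sudo tee -a /etc/fstab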

Balance Btrfs filesystems often

Use operating system utilities such as a cron job to balance the Btrfs filesystem regularly, during non-peak hours. This reclaims unallocated blocks and helps to prevent the filesystem from filling up unnecessarily. You can't rebalance a totally full Btrfs filesystem unless you add additional physical block devices to the filesystem.

See the Btrfs Wiki.
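A scheduled balance might look like the following sketch. It swaps in btrfs balance start with usage filters, so that only data and metadata chunks below a usage percentage are rewritten, which is typically much cheaper than a full balance. The script path, the cron schedule, the function name and the 50% default are hypothetical choices; the script only prints its command unless DRY_RUN=0.

```shell
#!/bin/sh
# Sketch of an off-peak balance job. It could be installed as, say,
# /usr/local/sbin/docker-btrfs-balance (hypothetical path) and scheduled
# from /etc/cron.d with a line such as:
#   0 3 * * 0 root DRY_RUN=0 /usr/local/sbin/docker-btrfs-balance

run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Rebalances only data (-d) and metadata (-m) chunks that are less than
# LIMIT percent used (50 by default, an arbitrary choice).
balance_docker_btrfs() {
  limit=${1:-50}
  mnt=${MOUNTPOINT:-/var/lib/docker}   # hypothetical override
  run btrfs balance start -dusage="$limit" -musage="$limit" "$mnt"
}

balance_docker_btrfs "$@"
```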

Use fast storage

Solid-state drives (SSDs) provide faster reads and writes than spinning disks.

Use volumes for write-heavy workloads

Volumes provide the best and most predictable performance for write-heavy workloads. This is because they bypass the storage driver and don't incur any of the potential overheads introduced by thin provisioning and copy-on-write. Volumes have other benefits, such as allowing you to share data among containers and persisting even when no running container is using them.